The script copies `ReleaseNotesTemplate.txt` over the corresponding

`ReleaseNotes.rst`/`.md` to clear the release notes.

The suffix of `ReleaseNotesTemplate.txt` must be `.txt`. If it is

`.rst`/`.md`, it will be treated as a documentation source file when

building documentation.
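
The copy step described above can be sketched as follows. This is a minimal illustration only, not the actual script: the function name `reset_release_notes` and the idea of passing a single docs directory are assumptions; only the three file names come from the text.

```python
import shutil
from pathlib import Path


def reset_release_notes(docs_dir: Path) -> Path:
    """Overwrite the existing release notes in docs_dir with the template.

    Hypothetical helper: the real script may locate files differently.
    """
    # The template deliberately ends in .txt so the documentation build
    # does not pick it up as a source file.
    template = docs_dir / "ReleaseNotesTemplate.txt"
    for name in ("ReleaseNotes.rst", "ReleaseNotes.md"):
        target = docs_dir / name
        if target.exists():
            shutil.copyfile(template, target)
            return target
    raise FileNotFoundError(f"no ReleaseNotes.rst/.md found in {docs_dir}")
```

Calling `reset_release_notes(Path("docs"))` would replace whichever notes file exists in `docs` with the template contents.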

This adds download links to the GitHub release pages for common

platforms. The automatically built packages' links are automatically

revealed once the builds are complete. For packages built by hand,

hidden links are included in the text for release uploaders to reveal

later.

The approach taken:

* "LLVM x.y.z Release" becomes the title for this links section.

* Automatically built packages are commented out with special markers so

we can find them to uncomment them later.

* There is placeholder text for the time between release creation and

release tasks finishing.

* Hand built packages have release links but these will need to be

un-commented by release uploaders.

* I have used vendor names for the architectures, which casual users

would recognise.

* Their signature file is linked as well. I expect most will ignore this

but better to show it to remind people it exists.

* I called it "signature" as a generic term to cover the .jsonl and .sig

files. Instructions to use these were added to the text in a previous

change.
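
The "comment out now, reveal later" idea from the list above can be sketched like this. The marker strings below are hypothetical stand-ins chosen for illustration; the real markers used in the release text may differ.

```python
# Hypothetical markers, not necessarily the ones used by the real workflow.
LINKS_BEGIN = "<!-- AUTO_LINKS_BEGIN"
LINKS_END = "AUTO_LINKS_END -->"
PLACEHOLDER_BEGIN = "<!-- AUTO_LINKS_PLACEHOLDER_BEGIN -->"
PLACEHOLDER_END = "<!-- AUTO_LINKS_PLACEHOLDER_END -->"


def reveal_links(body: str) -> str:
    """Uncomment the hidden links and drop the placeholder text."""
    out = []
    in_placeholder = False
    for line in body.splitlines():
        stripped = line.strip()
        if stripped in (LINKS_BEGIN, LINKS_END):
            # Drop the comment delimiters; the links between them stay.
            continue
        if stripped == PLACEHOLDER_BEGIN:
            in_placeholder = True
            continue
        if stripped == PLACEHOLDER_END:
            in_placeholder = False
            continue
        if not in_placeholder:
            out.append(line)
    return "\n".join(out)
```

Because the markers sit on their own lines, revealing the links is a plain line filter and does not need an HTML parser.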

Background:

https://discourse.llvm.org/t/rfc-explaining-release-package-types-and-purposes/85985

So that users can understand which they should use, particularly for

Windows. The original text about community builds is kept, after

explaining the main release package formats.

In addition, explain how to use gpg or gh to verify the packages.

Despite our attempt (build-docs.sh)

to build the documentation with SVG,

it still uses PNG https://llvm.org/doxygen/classllvm_1_1StringRef.html,

and that renders terribly on any high-DPI display.

SVG leads to a smaller installation and works fine

in all browsers (that has been true for _a while_:

https://caniuse.com/svg), so this patch unconditionally builds all

dot graphs as SVG in all subprojects and removes the option.

If `clang-cl.exe` and `lld-link.exe` are installed in `%PATH%`, the

Windows release build script will now use these by default, in place of

MSVC. The reason is that MSVC has had, for the past

year(s), O(N^2) behavior when building certain LLVM source files,

which leads to long build times (minutes per file). A report was filed

here:

https://developercommunity.visualstudio.com/t/ON2-in-SparseBitVectorBase-when-com/10657991

Also added a `--force-msvc` option to the script, to use MSVC even if

clang-cl is installed.
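
The selection rule described above can be sketched as follows. The real release script is a Windows batch file, so this Python version only illustrates the decision logic; the function name `pick_toolchain` and its return values are assumptions.

```python
import shutil
from typing import Callable, Optional


def pick_toolchain(
    force_msvc: bool = False,
    which: Callable[[str], Optional[str]] = shutil.which,
) -> str:
    """Return "clang-cl" or "msvc" following the rule in the text.

    `which` is injectable so the logic can be exercised without a real PATH.
    """
    if force_msvc:
        # Corresponds to passing --force-msvc to the script.
        return "msvc"
    # Both tools must be found in PATH for clang-cl to be the default.
    if which("clang-cl.exe") and which("lld-link.exe"):
        return "clang-cl"
    return "msvc"
```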

Recently GitHub changed something on their side, so we can no longer

rebase release PRs with the API. This means that we now have to

manually rebase the PR locally and then push the results. This fixes

the script that I use to merge PRs to the release branch by changing

the rebase part to do the local rebase, and also adds a new option,

`--rebase-only`, so that you can rebase the PRs more easily.

A minor change is that the script can now take a URL to the pull request

as well as just the PR ID.
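
Accepting either form of argument could look roughly like the sketch below. The helper name `parse_pr_number` and the exact URL pattern are assumptions made for illustration, not the script's actual code.

```python
import re


def parse_pr_number(arg: str) -> int:
    """Accept a bare PR ID ("12345") or a pull request URL."""
    arg = arg.strip()
    if re.fullmatch(r"[0-9]+", arg):
        return int(arg)
    # Matches e.g. https://github.com/<org>/<repo>/pull/12345[/files]
    match = re.search(r"/pull/([0-9]+)", arg)
    if match:
        return int(match.group(1))
    raise ValueError(f"not a PR ID or pull request URL: {arg!r}")
```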

Line ending policies were changed in the parent, dccebddb3b80. To make

it easier to resolve downstream merge conflicts after line-ending

policies are adjusted, this is a separate whitespace-only commit. If you

have merge conflicts as a result, you can simply `git add --renormalize

-u && git merge --continue` or `git add --renormalize -u && git rebase

--continue`, depending on your workflow.

When using the BSD sed that ships with macOS on the object files for

comparison, every command would error with

```

sed: RE error: illegal byte sequence

```

This was potentially fixed for an older version in

6c52b02e7d0df765da608d8119ae8a20de142cff but even the commands in the

example there still have this error. You can repro this with any binary:

```

$ sed s/a/b/ /bin/ls >/dev/null

sed: RE error: illegal byte sequence

```

Where LC_CTYPE appears to no longer solve the issue:

```

$ LC_CTYPE=C sed s/a/b/ /bin/ls >/dev/null

sed: RE error: illegal byte sequence

```

But this change with LC_ALL does:

```

$ LC_ALL=C sed s/a/b/ /bin/ls >/dev/null; echo $?

0

```

It seems like the difference here is that if you have LC_ALL set to

something else, LC_CTYPE does not override it. More info:

https://stackoverflow.com/a/23584470/902968
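
The precedence that explains the behaviour above (`LC_ALL` wins over `LC_CTYPE`, which wins over `LANG`) can be modelled in a few lines. This is only a sketch of the lookup order; the function name is hypothetical, and the final fallback to `"C"` is an assumption, since the default is implementation-defined.

```python
def effective_ctype(env: dict) -> str:
    """Return the locale that governs character handling for `env`."""
    # An empty value is treated the same as an unset variable.
    for name in ("LC_ALL", "LC_CTYPE", "LANG"):
        value = env.get(name)
        if value:
            return value
    return "C"  # assumed default when nothing is set
```

With `LC_ALL=en_US.UTF-8` already exported, setting `LC_CTYPE=C` has no effect in this model, which matches the failing `sed` invocation shown earlier.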

This was obsoleted by Visual Studio providing built-in support for

running clang-format in VS2017.

We haven't shipped it for years (since

10d2195305ac49605f2b7b6a25a4076c31923191), never got it working with

VS2019, and never even tried with VS2022.

It's time to retire the code.

because that doesn't work (results in `LINK : error LNK2001: unresolved

external symbol malloc`).

Based on the title of #91862 it was only intended for use in 64-bit

builds.

This script helps the release managers merge backport PRs.

It does the following things:

* Validates the PR: checks approval, target branch and many other things.

* Rebases the PR

* Checks out the PR locally

* Pushes the PR to the release branch

* Deletes the local branch

I have found the script very helpful for merging the PRs.

The old version in the llvm/actions repo stopped working after the

version variables were moved out of llvm/CMakeLists.txt. Composite

actions are simpler and don't require JavaScript, which is why I

reimplemented it as a composite action.

This will fix the failing ABI checks on the release branch.

This patch adds support in the bump-version script for bumping the

version of the mlgo-utils package. This should hopefully streamline the

process for that with the rest of the project and prevent having to

manually update this package individually.
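
A version bump of this kind usually comes down to rewriting one line in a source file. The sketch below assumes the package stores its version as a `__versioninfo__ = (major, minor, patch)` tuple; that layout and the function name are assumptions for illustration, not a description of the actual bump-version code.

```python
import re

# Assumed layout of the version line; the real package may differ.
_VERSIONINFO_RE = re.compile(
    r"^__versioninfo__[ \t]*=[ \t]*\(.*?\)[ \t]*$", re.MULTILINE
)


def bump_versioninfo(source: str, major: int, minor: int, patch: int) -> str:
    """Return `source` with its __versioninfo__ tuple replaced."""
    replacement = f"__versioninfo__ = ({major}, {minor}, {patch})"
    new_source, count = _VERSIONINFO_RE.subn(replacement, source)
    if count != 1:
        # Refuse to guess if the line is missing or duplicated.
        raise ValueError(f"expected exactly one __versioninfo__ line, found {count}")
    return new_source
```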

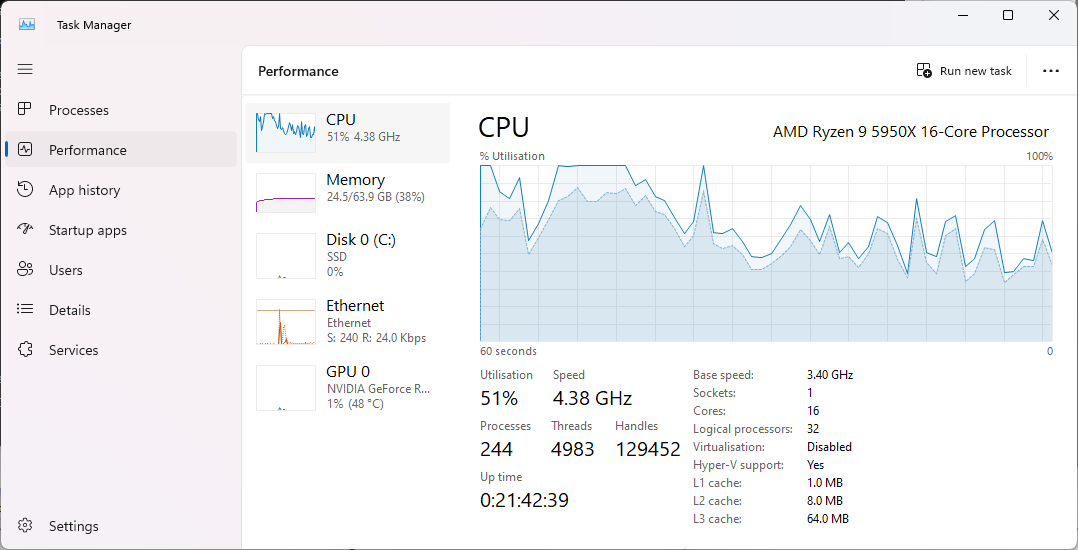

### Context

We have a longstanding performance issue on Windows, where to this day,

the default heap allocator still relies on locks. With the number of cores

increasing, building and using LLVM with the default Windows heap

allocator is sub-optimal. Notably, the ThinLTO link times with LLD are

extremely long, and increase proportionally with the number of cores in

the machine.

In

a6a37a2fcd,

I introduced the ability to build LLVM with several popular lock-free

allocators. Downstream users however have to build their own toolchain

with this option, and building an optimal toolchain is a bit tedious and

long. Additionally, LLVM is now integrated into Visual Studio, which

AFAIK re-distributes the vanilla LLVM binaries/installer. The point

being that many users are impacted and might not be aware of this

problem, or are unable to build a more optimal version of the toolchain.

The symptom before this PR is that most of the CPU time goes to the

kernel (darker blue) when linking with ThinLTO:

With this PR, most time is spent in user space (light blue):

On higher core count machines, before this PR, the CPU usage becomes

pretty much flat because of contention:

<img width="549" alt="VM_176_windows_heap"

src="https://github.com/llvm/llvm-project/assets/37383324/f27d5800-ee02-496d-a4e7-88177e0727f0">

With this PR, similarly, most CPU time is now used:

<img width="549" alt="VM_176_with_rpmalloc"

src="https://github.com/llvm/llvm-project/assets/37383324/7d4785dd-94a7-4f06-9b16-aaa4e2e505c8">

### Changes in this PR

The avenue I've taken here is to vendor/re-licence rpmalloc in-tree, and

use it when building the Windows 64-bit release. Given the permissive

rpmalloc licence, prior discussions with the LLVM foundation and

@lattner suggested this vendoring. Rpmalloc's author (@mjansson) kindly

agreed to ~~donate~~ re-licence the rpmalloc code in LLVM (please do

correct me if I misinterpreted our past communications).

I've chosen rpmalloc because it's small and gives the best value

overall. The source code is only 4 .c files. Rpmalloc is statically

replacing the weak CRT alloc symbols at link time, and has no dynamic

patching like mimalloc. As an alternative, there were several

unsuccessful attempts made by Russell Gallop to use SCUDO in the past,

please see thread in https://reviews.llvm.org/D86694. If later someone

comes up with a PR of similar performance that uses SCUDO, we could then

delete this vendored rpmalloc folder.

I've added a new cmake flag `LLVM_ENABLE_RPMALLOC` which essentially sets

`LLVM_INTEGRATED_CRT_ALLOC` to the in-tree rpmalloc source.

### Performance

The most obvious test is profiling a ThinLTO linking step with LLD. I've

used a Clang compilation as a testbed, i.e.

```

set OPTS=/GS- /D_ITERATOR_DEBUG_LEVEL=0 -Xclang -O3 -fstrict-aliasing -march=native -flto=thin -fwhole-program-vtables -fuse-ld=lld

cmake -G Ninja %ROOT%/llvm -DCMAKE_BUILD_TYPE=Release -DLLVM_ENABLE_ASSERTIONS=TRUE -DLLVM_ENABLE_PROJECTS="clang" -DLLVM_ENABLE_PDB=ON -DLLVM_OPTIMIZED_TABLEGEN=ON -DCMAKE_C_COMPILER=clang-cl.exe -DCMAKE_CXX_COMPILER=clang-cl.exe -DCMAKE_LINKER=lld-link.exe -DLLVM_ENABLE_LLD=ON -DCMAKE_CXX_FLAGS="%OPTS%" -DCMAKE_C_FLAGS="%OPTS%" -DLLVM_ENABLE_LTO=THIN

```

I've profiled the linking step with no LTO cache, with PowerShell, such

as:

```

Measure-Command { lld-link /nologo @CMakeFiles\clang.rsp /out:bin\clang.exe /implib:lib\clang.lib /pdb:bin\clang.pdb /version:0.0 /machine:x64 /STACK:10000000 /DEBUG /OPT:REF /OPT:ICF /INCREMENTAL:NO /subsystem:console /MANIFEST:EMBED,ID=1 }

```

Timings:

| Machine | Allocator | Time to link |
|--------|--------|--------|
| 16c/32t AMD Ryzen 9 5950X | Windows Heap | 10 min 38 sec |
| | **Rpmalloc** | **4 min 11 sec** |
| 32c/64t AMD Ryzen Threadripper PRO 3975WX | Windows Heap | 23 min 29 sec |
| | **Rpmalloc** | **2 min 11 sec** |
| | **Rpmalloc + /threads:64** | **1 min 50 sec** |
| 176 vCPU (2 socket) Intel Xeon Platinum 8481C (fixed clock 2.7 GHz) | Windows Heap | 43 min 40 sec |
| | **Rpmalloc** | **1 min 45 sec** |
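
To put the link times above in relative terms, the speedup per machine can be computed directly from the table. The snippet below only does that arithmetic; the dictionary keys are shortened labels chosen here, and the default-thread Rpmalloc row is used for each machine.

```python
def to_seconds(minutes: int, seconds: int) -> int:
    return minutes * 60 + seconds


# (Windows Heap, Rpmalloc) link times taken from the table above.
timings = {
    "Ryzen 9 5950X (16c/32t)": (to_seconds(10, 38), to_seconds(4, 11)),
    "Threadripper PRO 3975WX (32c/64t)": (to_seconds(23, 29), to_seconds(2, 11)),
    "Xeon 8481C (176 vCPU)": (to_seconds(43, 40), to_seconds(1, 45)),
}

speedups = {
    machine: heap / rpmalloc for machine, (heap, rpmalloc) in timings.items()
}

for machine, speedup in speedups.items():
    print(f"{machine}: {speedup:.1f}x faster with rpmalloc")
```

This works out to roughly 2.5x, 10.8x and 25.0x respectively, so the gain grows with the core count, consistent with the contention described earlier.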

This also improves the overall performance when building with clang-cl.

I've profiled a regular compilation of clang itself, i.e.:

```

set OPTS=/GS- /D_ITERATOR_DEBUG_LEVEL=0 /arch:AVX -fuse-ld=lld

cmake -G Ninja %ROOT%/llvm -DCMAKE_BUILD_TYPE=Release -DLLVM_ENABLE_ASSERTIONS=TRUE -DLLVM_ENABLE_PROJECTS="clang;lld" -DLLVM_ENABLE_PDB=ON -DLLVM_OPTIMIZED_TABLEGEN=ON -DCMAKE_C_COMPILER=clang-cl.exe -DCMAKE_CXX_COMPILER=clang-cl.exe -DCMAKE_LINKER=lld-link.exe -DLLVM_ENABLE_LLD=ON -DCMAKE_CXX_FLAGS="%OPTS%" -DCMAKE_C_FLAGS="%OPTS%"

```

This saves approx. 30 sec when building on the Threadripper PRO 3975WX:

```

(default Windows Heap)

C:\src\git\llvm-project>hyperfine -r 5 -p "make_llvm.bat stage1_test2" "ninja clang -C stage1_test2"

Benchmark 1: ninja clang -C stage1_test2

Time (mean ± σ): 392.716 s ± 3.830 s [User: 17734.025 s, System: 1078.674 s]

Range (min … max): 390.127 s … 399.449 s 5 runs

(rpmalloc)

C:\src\git\llvm-project>hyperfine -r 5 -p "make_llvm.bat stage1_test2" "ninja clang -C stage1_test2"

Benchmark 1: ninja clang -C stage1_test2

Time (mean ± σ): 360.824 s ± 1.162 s [User: 15148.637 s, System: 905.175 s]

Range (min … max): 359.208 s … 362.288 s 5 runs

```

Before this fix, when building the Windows LLVM package with the latest

cmake 3.29.3 I was seeing:

```

C:\git\llvm-project>llvm\utils\release\build_llvm_release.bat --version 19.0.0 --x64 --skip-checkout --local-python

...

-- Looking for FE_INEXACT

-- Looking for FE_INEXACT - found

-- Performing Test HAVE_BUILTIN_THREAD_POINTER

-- Performing Test HAVE_BUILTIN_THREAD_POINTER - Failed

-- Looking for mach/mach.h

-- Looking for mach/mach.h - not found

-- Looking for CrashReporterClient.h

-- Looking for CrashReporterClient.h - not found

-- Looking for pfm_initialize in pfm

-- Looking for pfm_initialize in pfm - not found

-- Could NOT find ZLIB (missing: ZLIB_LIBRARY ZLIB_INCLUDE_DIR)

CMake Error at C:/Program Files/CMake/share/cmake-3.29/Modules/FindPackageHandleStandardArgs.cmake:230 (message):

Could NOT find LibXml2 (missing: LIBXML2_INCLUDE_DIR)

Call Stack (most recent call first):

C:/Program Files/CMake/share/cmake-3.29/Modules/FindPackageHandleStandardArgs.cmake:600 (_FPHSA_FAILURE_MESSAGE)

C:/Program Files/CMake/share/cmake-3.29/Modules/FindLibXml2.cmake:108 (FIND_PACKAGE_HANDLE_STANDARD_ARGS)

cmake/config-ix.cmake:167 (find_package)

CMakeLists.txt:921 (include)

-- Configuring incomplete, errors occurred!

```

It looks like `LIBXML2_INCLUDE_DIRS` (with the extra 'S') is a result

variable that is set by cmake after a call to `find_package(LibXml2)`.

It is actually `LIBXML2_INCLUDE_DIR` (without the 'S') that should be

used as an input before the `find_package` call, since the 'S' variable

is unconditionally overwritten, see

https://github.com/Kitware/CMake/blob/master/Modules/FindLibXml2.cmake#L96.

I am unsure exactly why that worked with older cmake versions.

After aa02002491333c42060373bc84f1ff5d2c76b4ce we started passing the

user name to the create_release function and this was being interpreted

as the git tag.

Like the Linux releases, export a tar.xz file containing most LLVM tools,

including non-toolchain utilities, llvm-config, llvm-link and others.

We do this by reconfiguring cmake one last time at the last step,

running the install target so we do not need to recompile anything.

Fix #51192

Fix #53052

The default GitHub token does not have read permissions on the org, so

we need to use a custom token in order to read the members of the

llvm-release-managers team.

As described in [test-release.sh ninja install does builds in Phase

3](https://github.com/llvm/llvm-project/issues/80999), considerable

parts of Phase 3 of a `test-release.sh` build are run by `ninja

install`, ignoring both `$Verbose` and the parallelism set via `-j NUM`.

This patch fixes this by not specifying any explicit build target for

Phase 3, thus running the full build as usual.

Tested on `sparc64-unknown-linux-gnu`.

The current test-release.sh script does not install the necessary

compiler-rt builtins during Phase 1 on AIX, resulting in a

non-functional Phase 1 clang. Furthermore, the installation is also

necessary for Phase 2 on AIX.

Co-authored-by: Alison Zhang <alisonzhang@ibm.com>

* Split out the lit release job and the documentation build job into

their own workflow files. This makes it possible to manually run these

jobs via workflow_dispatch.

* Improve tag/user validation and ensure it gets run for each release

task.
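
A tag/user check of the kind mentioned above might look like the sketch below. It assumes release tags of the form `llvmorg-X.Y.Z` with an optional `-rcN` suffix and a caller-supplied set of release managers; the function name and error types are hypothetical, and the real workflow may validate more (or differently).

```python
import re

# Assumed tag shape: llvmorg-<major>.<minor>.<patch>[-rc<N>]
_TAG_RE = re.compile(r"llvmorg-([0-9]+\.[0-9]+\.[0-9]+(?:-rc[0-9]+)?)")


def validate_release(tag: str, user: str, release_managers: set) -> str:
    """Return the version part of `tag` if both tag and user are acceptable."""
    match = _TAG_RE.fullmatch(tag)
    if match is None:
        raise ValueError(f"invalid release tag: {tag!r}")
    if user not in release_managers:
        raise PermissionError(f"{user!r} is not allowed to run release tasks")
    return match.group(1)
```

Running the same check at the start of every release task, rather than once up front, keeps a manually dispatched job from bypassing it.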

Add two new flags to the release script:

`--skip-checkout` builds from the local source folder, instead of the downloaded source package

`--local-python` uses whichever local Python version is installed, instead of a specific version (3.10)

If the LLVM source is already in `C:\git\llvm-project` then one can do in an admin prompt:

```

C:\git>llvm-project\llvm\utils\release\build_llvm_release.bat --version 18.0.0 --x64 --skip-checkout

...

```

This allows for iterating more easily on build issues, just before a new LLVM package is made.

Also fix some warnings on the second stage build (`-Wbackend-plugin`).

You can now pass the -use-cmake-cache option to test-release.sh and it

will use a predefined cache file for building the release. This will

make it easier to reproduce the builds and add other enhancements like

PGO or bolt optimizations.

---------

Co-authored-by: Konrad Kleine <konrad.kleine@posteo.de>

Compiler-rt built-ins are needed to have a functional Phase 1 clang

compiler on AIX. This PR adds compiler-rt to the runtime_list during Phase 1 to avoid

this problem.

---------

Co-authored-by: Alison Zhang <alisonzhang@ibm.com>

Doxygen documentation takes a very long time to build. When making releases, we

want to get the normal documentation up earlier so that we don't have to

wait for the doxygen documentation.

This PR just adds the flag to disable doxygen builds; I will later

make a PR that changes the actions to first build the normal docs and

another job to build the doxygen docs.

While doing a test-release.sh run on FreeBSD, I ran into a sed error due

to the introduction of the GNU extension '\s' in commit 500587e23dfd.

Scanning for blanks (spaces and tabs) could be done in a more portable

fashion using the [[:blank:]] character class. But it is easier to avoid

the original problem, which is that the projects and runtime lists have

to be separated by semicolons, and cannot start with a semicolon.

Instead, use the shell's alternate value parameter expansion mechanism,

which makes it easy to append items to lists with separators in between,

and without any leading separator. This also avoids having to run sed on

the end result.
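
The effect of that shell idiom (a `${list:+...}` style expansion that emits the separator only when the list is already non-empty) is shown below in Python, purely to illustrate the logic. The script itself does this in shell; the function name and the sample item names are assumptions.

```python
def append_item(current: str, item: str, sep: str = ";") -> str:
    """Append `item` to a separator-joined list without a leading separator.

    Mirrors the shell pattern list="${list:+$list;}item": the separator is
    only added when `current` is already non-empty.
    """
    return f"{current}{sep}{item}" if current else item


projects = ""
for name in ("clang", "lld", "compiler-rt"):
    projects = append_item(projects, name)
```

Because the separator is decided at append time, the result never needs a clean-up pass to strip a leading semicolon.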

In addition, build any selected runtimes in the second phase, otherwise

the third phase can fail to find several symbols in compiler-rt, if that

has been built. This is because the host's compiler-rt is not guaranteed

to have those symbols.

Reviewed By: tstellar

Differential Revision: https://reviews.llvm.org/D145884